Instance Segmentation of Unlabeled Modalities via Cyclic Segmentation GAN

5 Donostia International Physics Center; 6 University of the Basque Country; 7 Ikerbasque, Basque Foundation for Science; 8 HHMI; 9 Boston College

Abstract

Instance segmentation for unlabeled imaging modalities is a challenging but essential task, as collecting expert annotations can be expensive and time-consuming. Existing methods segment a new modality either by deploying a pre-trained model optimized on diverse training data or by conducting domain translation and image segmentation as two independent steps. In this work, we propose a novel Cyclic Segmentation Generative Adversarial Network (CySGAN) that conducts image translation and instance segmentation jointly within a unified framework. In addition to the CycleGAN losses for image translation and the supervised losses for the annotated source domain, we introduce self-supervised and segmentation-based adversarial objectives that improve model performance by leveraging unlabeled target-domain images. We benchmark our approach on 3D neuronal nuclei segmentation with annotated electron microscopy (EM) images and unlabeled expansion microscopy (ExM) data. CySGAN outperforms both pre-trained generalist models and baselines that perform image translation and segmentation sequentially. Our implementation and the newly collected, densely annotated ExM nuclei dataset, named NucExM, are publicly available.
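
The image-translation part of the objective follows CycleGAN. The following is a minimal NumPy sketch, assuming the standard CycleGAN choices of an L1 cycle-consistency loss and a least-squares adversarial loss with weight lambda_cyc = 10; CySGAN's actual loss weights are not stated here. The callables `g_xy`, `g_yx`, `d_x` and `d_y` are hypothetical stand-ins for the two generators and two discriminators.

```python
import numpy as np

def cycle_consistency_loss(real, reconstructed):
    """L1 distance between an input volume and its round-trip reconstruction."""
    return float(np.mean(np.abs(real - reconstructed)))

def lsgan_discriminator_loss(d_real, d_fake):
    """Least-squares GAN loss for a discriminator: real -> 1, fake -> 0."""
    return float(0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2)))

def lsgan_generator_loss(d_fake):
    """Least-squares GAN loss for a generator: push D(fake) toward 1."""
    return float(np.mean((d_fake - 1.0) ** 2))

def cyclegan_generator_objective(x, y, g_xy, g_yx, d_x, d_y, lambda_cyc=10.0):
    """Translation losses for G: X->Y (g_xy) and F: Y->X (g_yx).

    All four arguments g_xy, g_yx, d_x, d_y are hypothetical callables on
    NumPy arrays standing in for the real networks.
    """
    fake_y = g_xy(x)  # X translated into Y
    fake_x = g_yx(y)  # Y translated into X
    adv = lsgan_generator_loss(d_y(fake_y)) + lsgan_generator_loss(d_x(fake_x))
    cyc = (cycle_consistency_loss(x, g_yx(fake_y))    # x -> y -> x
           + cycle_consistency_loss(y, g_xy(fake_x)))  # y -> x -> y
    return adv + lambda_cyc * cyc
```

With identity generators and discriminators that always output 1, both terms vanish and the objective is 0; CySGAN adds the segmentation terms on top of this translation objective.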

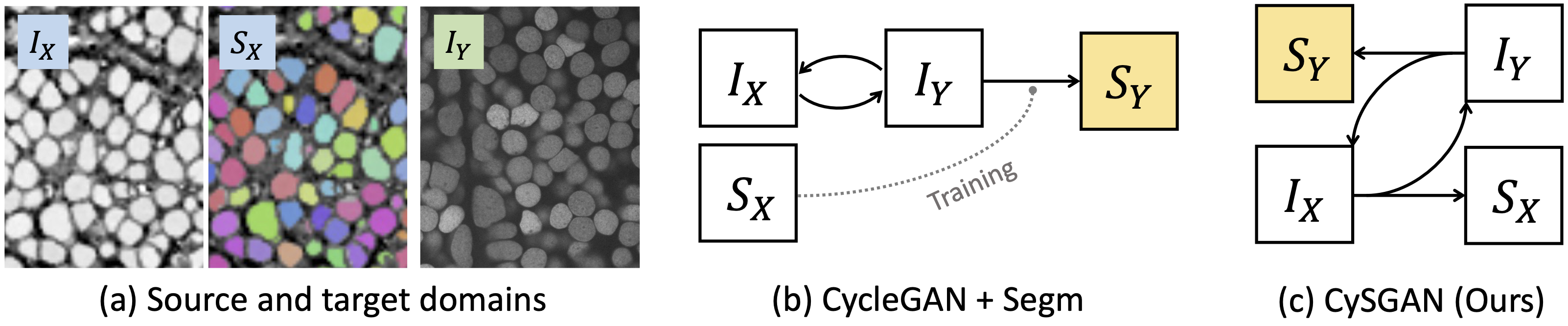

Overview of task and methods. (a) We aim to segment an unlabeled target domain by leveraging the images and masks in the source domain. Instead of (b) conducting image translation (e.g., via CycleGAN) and instance segmentation as two separate steps, we propose (c) the CySGAN framework, which unifies the two functionalities and is optimized with both image-translation losses and supervised and semi-supervised segmentation losses.

Method

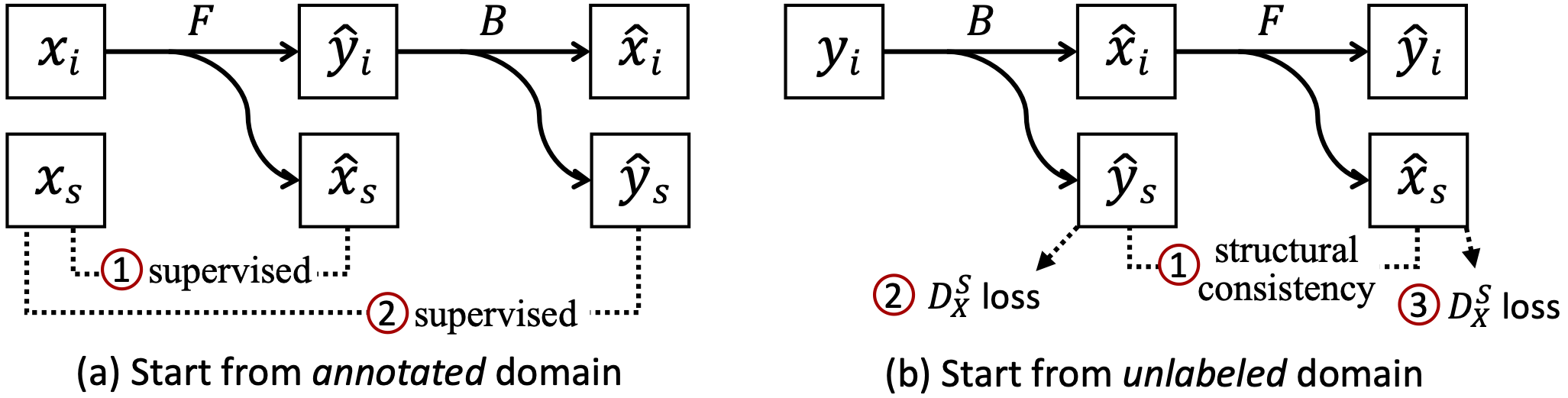

We show the training objectives (image-translation losses are omitted for simplicity) as well as the learn-to-restore task.

Segmentation losses. (a) For an annotated image in X, we compute supervised losses between the predicted representations and the label. (b) For an unlabeled image in Y, we enforce structural consistency between predicted representations (as the underlying structures should be shared) and apply adversarial losses to improve the quality of predictions in the absence of paired labels.
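
The three segmentation terms can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the CySGAN implementation: the 2-channel representation (foreground mask and instance contour, both in [0, 1]), binary cross-entropy for the supervised term, mean squared error for the consistency term, a least-squares adversarial term, and the weights `w_cons` and `w_adv` are all hypothetical choices. The two target-domain predictions compared by the consistency term could be, for example, the prediction for a Y image and the prediction for its synthesized X counterpart.

```python
import numpy as np

EPS = 1e-7

def bce(pred, target):
    """Binary cross-entropy averaged over voxels (pred clipped for stability)."""
    pred = np.clip(pred, EPS, 1.0 - EPS)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))

def supervised_loss(pred, label):
    """(a) Labeled X image: compare the predicted representation with its label.
    Hypothetical layout: channel 0 = foreground, channel 1 = instance contour."""
    return bce(pred[0], label[0]) + bce(pred[1], label[1])

def consistency_loss(pred_a, pred_b):
    """(b) Unlabeled Y image: two predictions of the same structures should agree."""
    return float(np.mean((pred_a - pred_b) ** 2))

def seg_adversarial_loss(d_scores):
    """(b) Least-squares adversarial term: a discriminator scores the target-domain
    prediction, and the segmenter is pushed toward the 'real' score of 1."""
    return float(np.mean((d_scores - 1.0) ** 2))

def segmentation_objective(pred_x, label_x, pred_y, pred_y_other, d_scores,
                           w_cons=1.0, w_adv=0.1):
    # w_cons and w_adv are placeholder weights, not values from the paper.
    return (supervised_loss(pred_x, label_x)
            + w_cons * consistency_loss(pred_y, pred_y_other)
            + w_adv * seg_adversarial_loss(d_scores))
```

Only term (a) needs labels, so terms (b) are the ones that let unlabeled ExM volumes contribute gradients during training.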

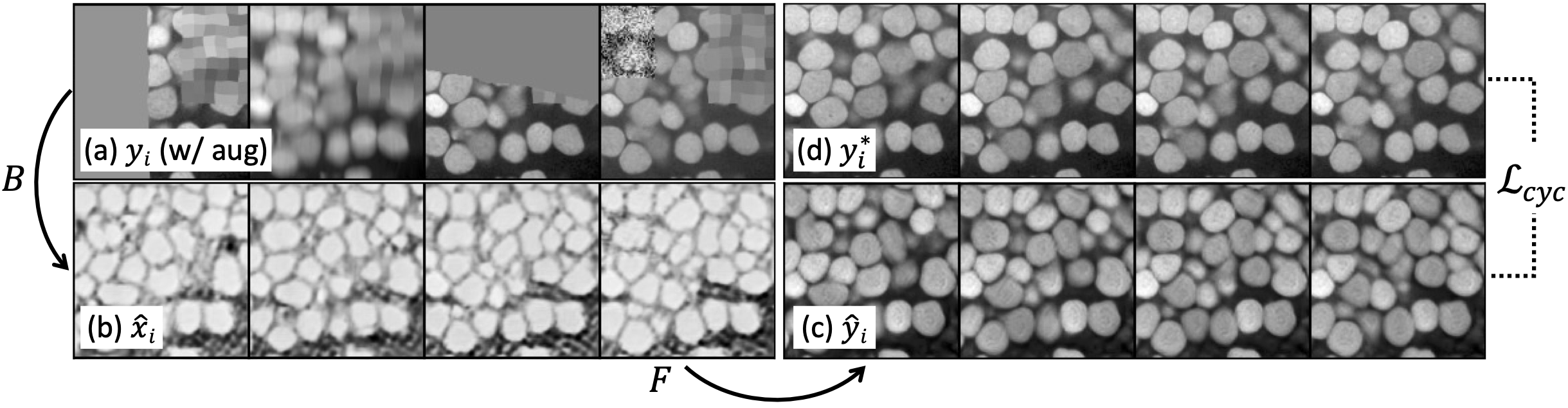

Training augmentations. We show four consecutive slices of (a) the augmented real Y input, (b) the synthesized X volume, (c) the reconstructed Y volume, and (d) the real Y volume without augmentations. By enforcing cycle consistency between (c) and (d) rather than between (c) and the augmented input (a), the model learns to restore corrupted regions using 3D context.
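
The key detail of the learn-to-restore task is which volume the cycle loss is compared against. A minimal NumPy sketch follows; the slice-blanking corruption, the L1 loss, and the function names are hypothetical illustrations, and `g_yx`/`g_xy` stand in for the two generators.

```python
import numpy as np

def corrupt_slices(volume, rng, n_slices=1):
    """Hypothetical augmentation: blank out random z-slices of a (Z, H, W) volume."""
    corrupted = volume.copy()
    z = rng.choice(volume.shape[0], size=n_slices, replace=False)
    corrupted[z] = 0.0
    return corrupted

def learn_to_restore_cycle_loss(y_clean, g_yx, g_xy, rng, n_slices=1):
    """Cycle loss measured against the CLEAN volume, not the augmented input."""
    y_aug = corrupt_slices(y_clean, rng, n_slices)  # (a) augmented real Y input
    x_syn = g_yx(y_aug)                             # (b) synthesized X volume
    y_rec = g_xy(x_syn)                             # (c) reconstructed Y volume
    return float(np.mean(np.abs(y_rec - y_clean)))  # compare (c) with (d)
```

If the loss compared `y_rec` with `y_aug` instead, an identity mapping would already reach zero loss and the generators would have no incentive to fill in the blanked slice; comparing with `y_clean` penalizes exactly the corrupted voxels.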

Results

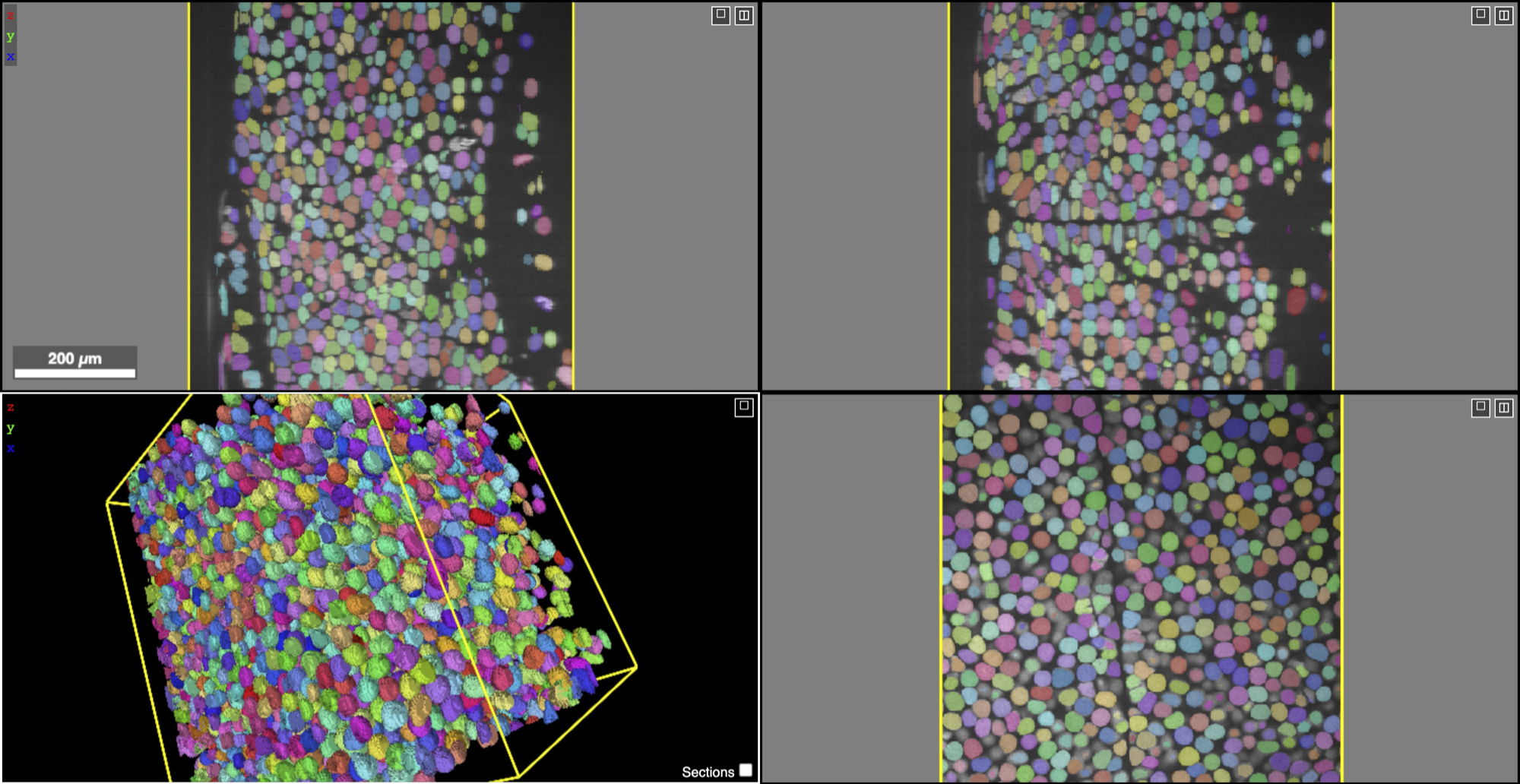

Multi-view visualization and 3D meshes of NucExM. We show composite views of microscopy images and instance masks. The visualizations were generated with Neuroglancer (https://github.com/google/neuroglancer).
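
The figures were produced with Neuroglancer; the NumPy sketch below is not that pipeline. It only illustrates, under assumed choices (alpha blending, random per-instance colors, a hypothetical `composite_view` helper), how a 2D composite view of an image slice and its instance mask can be formed.

```python
import numpy as np

def composite_view(image, instances, alpha=0.4, seed=0):
    """Blend a grayscale slice (H, W) with an instance-label slice (H, W).

    Each nonzero instance id gets a random RGB color; background (id 0)
    keeps the normalized grayscale intensity.
    """
    img = image.astype(np.float64)
    img = (img - img.min()) / (img.max() - img.min() + 1e-8)  # scale to [0, 1]
    rgb = np.repeat(img[..., None], 3, axis=-1)               # gray -> RGB
    rng = np.random.default_rng(seed)
    ids = np.unique(instances)
    ids = ids[ids != 0]                                       # drop background
    palette = rng.random((len(ids), 3))                       # one color per instance
    for color, inst_id in zip(palette, ids):
        mask = instances == inst_id
        rgb[mask] = (1.0 - alpha) * rgb[mask] + alpha * color
    return rgb
```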

Citation

@article{lauenburg2022instance,
  title={Instance Segmentation of Unlabeled Modalities via Cyclic Segmentation GAN},
  author={Lauenburg, Leander and Lin, Zudi and Zhang, Ruihan and Santos, M{\'a}rcia dos and Huang, Siyu and Arganda-Carreras, Ignacio and Boyden, Edward S and Pfister, Hanspeter and Wei, Donglai},
  journal={arXiv preprint arXiv:2204.03082},
  year={2022}
}

Acknowledgement

This work has been partially supported by NSF awards IIS-1835231 and IIS-2124179 and NIH grant 5U54CA225088-03. Leander Lauenburg acknowledges support from a fellowship within the IFI program of the German Academic Exchange Service (DAAD). Ignacio Arganda-Carreras acknowledges the support of the Beca Leonardo a Investigadores y Creadores Culturales 2020 de la Fundación BBVA. Edward S. Boyden acknowledges support from NIH 1R01EB024261, NIH 1R01MH123403, NIH 1R01MH123977, Lisa Yang, John Doerr, and Schmidt Futures.